Every B2B growth leader knows the promise: apply AI to lead generation and watch your funnel accelerate. But the reality often falls far short. Most AI-driven lead gen pilots stall before they deliver meaningful results.

The reason isn’t the technology. It’s the foundations beneath it. Too many teams rush into automation without first clarifying who they’re targeting, cleaning their data, or setting governance in motion.

In this article you’ll discover:

- The key failure points that derail AI pilots.

- The best practices that the 5% of successful initiatives consistently apply.

- The leadership and governance moves that turn pilots into demand-generation systems, not just experiments.

Why do most AI lead gen pilots fail?

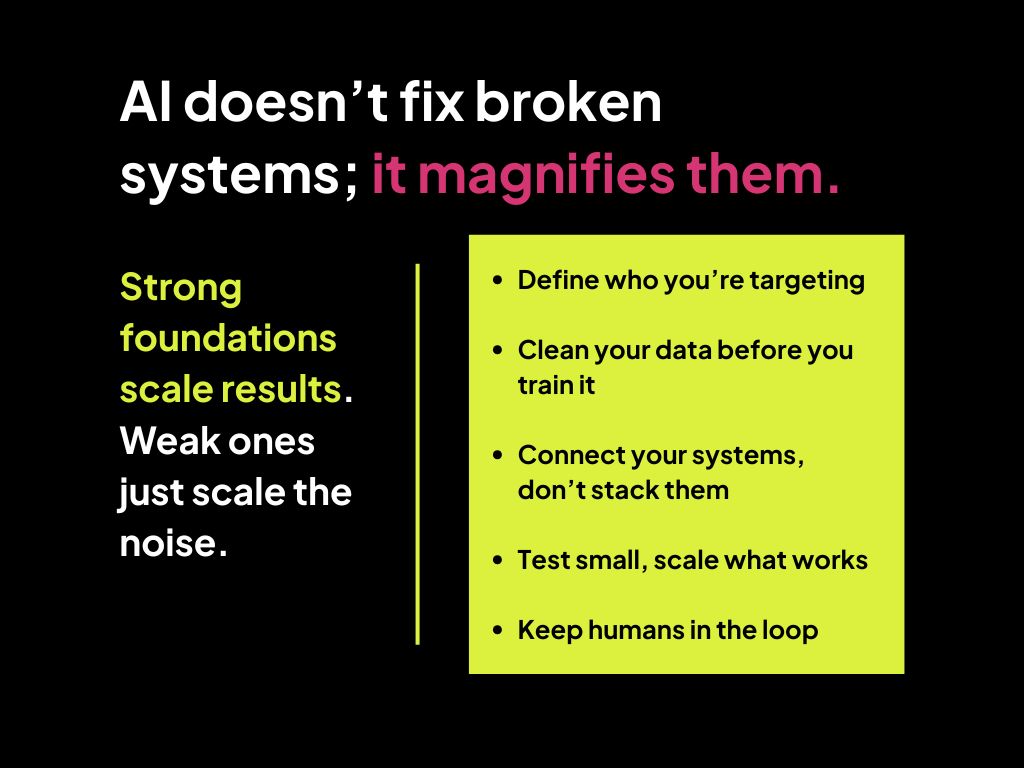

TL;DR: Most AI lead generation pilots fail because teams automate before they align. The biggest issues are unclear ICPs, poor data quality, disconnected systems, and the absence of basic structural hygiene like version control or testing environments. When the target audience is vague, the data is inconsistent, and workflows don’t talk to each other, AI only amplifies the chaos. The result isn’t smarter marketing; it’s faster failure.

AI can automate workflows, but it cannot fix broken systems. Most pilots fail because teams try to solve too much, too fast, without the structural readiness to make AI work.

Unclear ICP and weak targeting

AI can only optimise what you define. When the target audience is broad or outdated, the model learns from the wrong examples. In one audit, 40% of “qualified” leads turned out to be ghost accounts or contacts with incorrect job titles, a direct result of incomplete CRM data and undefined buyer profiles. Without a precise ICP, AI ends up finding volume, not value.
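A precise ICP is easiest to enforce when it is written down as explicit rules rather than left as shared intuition. The Python sketch below encodes a hypothetical ICP. The `ICP` class, its fields, and the example industries and titles are illustrative assumptions, not a standard schema. The filter rejects any lead with missing data instead of guessing, which is one way to keep ghost accounts and contacts with unknown titles out of the "qualified" pile.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ICP:
    """Hypothetical ICP definition; field names are illustrative only."""
    industries: frozenset[str]       # lowercase industry labels
    min_employees: int
    max_employees: int
    title_keywords: tuple[str, ...]  # lowercase fragments of target titles


def matches_icp(lead: dict, icp: ICP) -> bool:
    """Return True only if every ICP criterion is present and satisfied.

    Leads with missing industry, headcount, or title are rejected rather
    than guessed at, so incomplete CRM records cannot pass as qualified.
    """
    industry = lead.get("industry")
    employees = lead.get("employees")
    title = lead.get("title")
    if not industry or employees is None or not title:
        return False
    if industry.strip().lower() not in icp.industries:
        return False
    if not icp.min_employees <= employees <= icp.max_employees:
        return False
    title_lower = title.lower()
    return any(keyword in title_lower for keyword in icp.title_keywords)


icp = ICP(
    industries=frozenset({"saas", "fintech"}),
    min_employees=50,
    max_employees=1000,
    title_keywords=("vp marketing", "head of growth", "cmo"),
)

leads = [
    {"id": 1, "industry": "SaaS", "employees": 200, "title": "VP Marketing"},
    {"id": 2, "industry": "SaaS", "employees": 200, "title": None},     # incomplete record
    {"id": 3, "industry": "Retail", "employees": 300, "title": "CMO"},  # outside the ICP
]
qualified_ids = [lead["id"] for lead in leads if matches_icp(lead, icp)]
print(qualified_ids)  # [1]
```

Substring matching on titles is deliberately simple here. A real deployment would likely need a maintained title taxonomy.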

Poor-quality and inconsistent data

Most pilots underestimate how fragile data pipelines are. Records from different tools often conflict: job titles land in one field in one tool and a different field in another, and company names are missing altogether. When those errors flow through enrichment or scoring models, the entire system misfires. The result is what every operations team dreads: garbage in, garbage out.
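One way to stop inconsistent records at the door is to normalize every record into a single canonical shape before enrichment or scoring sees it. In this minimal Python sketch, `FIELD_ALIASES`, `REQUIRED_FIELDS`, and `normalize_record` are hypothetical names. The alias table stands in for whatever field names your actual tools export.

```python
# Hypothetical alias table: each source tool names the same attribute differently.
FIELD_ALIASES = {
    "title": "title",
    "job_title": "title",
    "jobtitle": "title",
    "company": "company",
    "company_name": "company",
    "organization": "company",
    "email": "email",
    "email_address": "email",
}
REQUIRED_FIELDS = ("title", "company", "email")


def normalize_record(raw: dict) -> tuple[dict, list[str]]:
    """Map source-specific keys to canonical names, tidy the values,
    and report which required fields are missing instead of passing gaps on."""
    record: dict = {}
    for key, value in raw.items():
        canonical = FIELD_ALIASES.get(key.strip().lower())
        if canonical is None or value is None:
            continue  # unknown field or empty value: skip it
        cleaned = " ".join(str(value).split())  # collapse stray whitespace
        if cleaned:
            record[canonical] = cleaned
    if "email" in record:
        record["email"] = record["email"].lower()
    missing = [name for name in REQUIRED_FIELDS if name not in record]
    return record, missing


record, missing = normalize_record(
    {"Job_Title": "  Head of  Growth ", "Email_Address": "Ana@Example.COM"}
)
print(record)   # {'title': 'Head of Growth', 'email': 'ana@example.com'}
print(missing)  # ['company']
```

Records that come back with missing required fields can be quarantined for review rather than scored.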

Disconnected systems

Automation only delivers when every system speaks the same language. In many pilots, enrichment, scoring, and routing workflows sit in silos. Webhooks fail, API tokens expire, and records are dropped without anyone being alerted. By the time someone notices, response rates have already fallen.

The fix isn’t another tool; it’s orchestration, clear ownership, and visibility across every workflow.
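Visibility across workflows can start with something as simple as reconciliation: count what left one stage and confirm it arrived at the next. The Python sketch below assumes each stage can report the set of record IDs it sent or received. `reconcile` and the stage names are hypothetical, and `logger.error` stands in for whatever alerting channel your team actually watches.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline.reconcile")


def reconcile(stage_from: str, sent_ids: set[str],
              stage_to: str, received_ids: set[str],
              max_loss_ratio: float = 0.0) -> set[str]:
    """Compare the IDs that left one stage with the IDs that reached the next.

    Returns the missing IDs and logs an error (a stand-in for a real alert)
    whenever the share of lost records exceeds max_loss_ratio.
    """
    missing = sent_ids - received_ids
    loss_ratio = len(missing) / len(sent_ids) if sent_ids else 0.0
    if loss_ratio > max_loss_ratio:
        logger.error("%d of %d records sent by %s never reached %s: %s",
                     len(missing), len(sent_ids), stage_from, stage_to,
                     sorted(missing))
    return missing


# Usage: enrichment sent three leads, but scoring only received two.
lost = reconcile("enrichment", {"lead-1", "lead-2", "lead-3"},
                 "scoring", {"lead-1", "lead-3"})
print(sorted(lost))  # ['lead-2']
```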

Building without structure

The most common and costly mistake is trying to do everything at once. Teams attempt to automate the entire funnel instead of validating one high-impact process first. With no database layer for state tracking, there’s no record of which leads were processed or lost.
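A state-tracking layer does not need to be elaborate to answer "which leads were processed, and which were lost?" This sketch uses Python's built-in `sqlite3` module with a hypothetical `lead_state` table. The table, the status values, and the helper functions are illustrative assumptions. The upsert syntax (`ON CONFLICT ... DO UPDATE`) requires SQLite 3.24 or later.

```python
import sqlite3


def open_state_store(path: str = ":memory:") -> sqlite3.Connection:
    """Create a minimal, hypothetical state table (in memory by default)."""
    conn = sqlite3.connect(path)
    # status holds illustrative values such as 'queued', 'enriched', 'routed', 'failed'
    conn.execute(
        """CREATE TABLE IF NOT EXISTS lead_state (
               lead_id    TEXT PRIMARY KEY,
               status     TEXT NOT NULL,
               updated_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
           )"""
    )
    return conn


def set_status(conn: sqlite3.Connection, lead_id: str, status: str) -> None:
    """Insert or update a lead's status so every transition leaves a record."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            """INSERT INTO lead_state (lead_id, status) VALUES (?, ?)
               ON CONFLICT(lead_id) DO UPDATE SET
                   status = excluded.status,
                   updated_at = CURRENT_TIMESTAMP""",
            (lead_id, status),
        )


def leads_with_status(conn: sqlite3.Connection, status: str) -> list[str]:
    """List the IDs of leads currently in the given status."""
    rows = conn.execute(
        "SELECT lead_id FROM lead_state WHERE status = ? ORDER BY lead_id",
        (status,),
    )
    return [row[0] for row in rows]


conn = open_state_store()
set_status(conn, "lead-1", "queued")
set_status(conn, "lead-2", "queued")
set_status(conn, "lead-1", "enriched")
print(leads_with_status(conn, "queued"))    # ['lead-2']
print(leads_with_status(conn, "enriched"))  # ['lead-1']
```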

With no version control or testing environment, a single change can break production, and no one can roll it back. This isn’t an AI problem. It’s a systems-design problem. Success depends on structure, not speed.

What does it take to build an AI lead gen pilot that actually works?

TL;DR: The 5% of AI pilots that succeed do a few things differently. They start small, test with real data, measure relentlessly, and build within real-world constraints. Instead of chasing perfection, they focus on creating visible value fast, and they design systems that can be debugged, maintained, and scaled. Success comes from discipline, not experimentation.

Start small and go deep

Successful teams don’t try to automate the entire funnel. They pick one high-impact process, like lead enrichment or routing, and make it work flawlessly before adding the next layer. A single end-to-end loop that works beats ten partial automations that fail in the gaps.

Use real data from day one

Sanitized test data hides the real issues. Working with 50 to 100 real prospects early exposes messy records, broken personalization, and compliance gaps before they scale. Real data surfaces real problems, and solving them early builds credibility in the results.

Ship value in 30 days

Aim to deliver a concrete improvement within the first month. Early proof of value keeps the initiative funded and focused.

Measure obsessively

Track response rates, data accuracy, time saved, and cost per qualified lead every week. For instance, if response rates drop by 40%, you need to see it quickly so you can diagnose whether the cause is targeting, deliverability, or a technical break in personalization.
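Weekly tracking only helps if someone, or something, compares this week with last week. Here is a minimal Python sketch of that comparison. The metric names, the example values, and the 20% alert threshold are all hypothetical. Note the direction flag: a falling response rate is bad, but a falling cost per qualified lead is good, so the check flips the sign for metrics where an increase is the problem.

```python
def adverse_changes(last_week: dict[str, float], this_week: dict[str, float],
                    higher_is_better: dict[str, bool],
                    threshold_pct: float = 20.0) -> dict[str, float]:
    """Return {metric: % change} for metrics that moved the wrong way by at
    least threshold_pct percent week over week."""
    alerts = {}
    for metric, previous in last_week.items():
        current = this_week.get(metric)
        if current is None or previous == 0:
            continue  # no comparable value; a real pipeline might alert on this too
        change_pct = (current - previous) / previous * 100
        # For metrics where an increase is bad (e.g. cost), flip the sign.
        adverse_pct = -change_pct if higher_is_better.get(metric, True) else change_pct
        if adverse_pct >= threshold_pct:
            alerts[metric] = round(change_pct, 1)
    return alerts


# Hypothetical weekly figures: rates in %, cost in dollars.
last_week = {"response_rate": 5.0, "data_accuracy": 92.0, "cost_per_qualified_lead": 120.0}
this_week = {"response_rate": 3.0, "data_accuracy": 91.0, "cost_per_qualified_lead": 150.0}
direction = {"response_rate": True, "data_accuracy": True, "cost_per_qualified_lead": False}

print(adverse_changes(last_week, this_week, direction))
# {'response_rate': -40.0, 'cost_per_qualified_lead': 25.0}
```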

Build with production constraints

Prototype with the same limits you will live with at scale. If enrichment costs $5 per lead in a pilot, then at 50,000 leads a month the bill becomes $250,000 a month, which will not clear most budgets. Design for the economics you will actually run.
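The arithmetic is worth running in both directions: forward from the pilot's unit cost, and backward from the budget you can actually defend. In this small Python sketch, the $5 per lead and 50,000 leads a month come from the example above. The $20,000 monthly budget is a hypothetical figure chosen only for illustration.

```python
def monthly_cost(cost_per_lead: float, leads_per_month: int) -> float:
    """Projected monthly spend at a given unit cost and volume."""
    return cost_per_lead * leads_per_month


def max_cost_per_lead(monthly_budget: float, leads_per_month: int) -> float:
    """Highest unit cost that keeps the given volume inside the budget."""
    return monthly_budget / leads_per_month


projected = monthly_cost(5.00, 50_000)        # pilot price at production volume
ceiling = max_cost_per_lead(20_000, 50_000)   # hypothetical $20,000/month budget
print(f"Projected: ${projected:,.0f}/month")  # Projected: $250,000/month
print(f"Ceiling: ${ceiling:.2f}/lead")        # Ceiling: $0.40/lead
```

At that hypothetical budget, enrichment would need to cost no more than $0.40 per lead. That is a 12.5-fold reduction from the pilot price, the kind of gap worth discovering before scaling rather than after.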

Engineer for reliability

Reliability is more valuable than speed. Every workflow should have error handling, retry logic, and alert systems that flag failures before they compound.

In production, resilience is what keeps campaigns running when APIs throttle or credentials expire.
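Retry logic is where reliability usually starts. The Python sketch below retries only errors classified as transient, using the hypothetical `TransientError` as a stand-in for throttling or timeouts. It backs off exponentially with random jitter and calls an alert hook once retries are exhausted. Expired credentials usually need a token refresh or a human rather than another attempt, so in this sketch any non-transient exception propagates immediately. `call_with_retry` and its parameters are illustrative, not a library API.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (throttling, timeouts)."""


def call_with_retry(func: Callable[[], T], *, max_attempts: int = 4,
                    base_delay: float = 0.5, max_delay: float = 8.0,
                    on_give_up: Optional[Callable[[Exception], None]] = None,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call func, retrying TransientError with exponential backoff and full jitter.

    Any other exception propagates immediately. After the final failed attempt,
    on_give_up (e.g. a paging or chat alert) is called before re-raising.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError as exc:
            if attempt == max_attempts:
                if on_give_up is not None:
                    on_give_up(exc)
                raise
            # Cap the backoff window, then wait a random fraction of it.
            window = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, window))
    raise ValueError("max_attempts must be at least 1")


# Usage: an enrichment call that is throttled twice, then succeeds.
calls = []

def flaky_enrichment() -> dict:
    calls.append(1)
    if len(calls) < 3:
        raise TransientError("HTTP 429: rate limited")
    return {"lead_id": "lead-1", "enriched": True}

result = call_with_retry(flaky_enrichment, sleep=lambda _: None)  # skip real waits in the demo
print(result, len(calls))  # {'lead_id': 'lead-1', 'enriched': True} 3
```

Passing `sleep` in as a parameter keeps the demo instant and makes the function easy to test. In production you would leave the default `time.sleep`.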

Design for debuggability

Visibility drives trust. The best pilots log every step, including API responses, timestamps, and record IDs, so teams can trace issues quickly and improve continuously.

When things break, a transparent system turns firefighting into problem-solving.
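One common way to make every step traceable is to log one structured JSON line per step, keyed by record ID. This Python sketch uses only the standard library. `log_step` and the field names (`step`, `record_id`, `status`, plus extra details such as an HTTP status) are hypothetical choices, not a required format, and `example-provider` is a placeholder.

```python
import json
import logging
import sys
from datetime import datetime, timezone

logger = logging.getLogger("leadgen.trace")
_handler = logging.StreamHandler(sys.stdout)
_handler.setFormatter(logging.Formatter("%(message)s"))  # the message is already JSON
logger.addHandler(_handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate lines if the root logger also has a handler


def log_step(step: str, record_id: str, status: str, **details) -> str:
    """Emit one JSON line per pipeline step so any lead can be traced end to end."""
    entry = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "step": step,
        "record_id": record_id,
        "status": status,
        **details,  # e.g. HTTP status, provider name, error message
    }
    line = json.dumps(entry, sort_keys=True)
    logger.info(line)
    return line


line = log_step("enrichment", "lead-42", "error",
                http_status=429, provider="example-provider")
```

Because each line is valid JSON, a log aggregator or a short script can filter every event for `lead-42` and reconstruct exactly where it failed.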

Why do leadership oversight and governance matter in AI pilots?

TL;DR: Human oversight still defines AI success. AI can accelerate execution, but only human leadership gives it direction. Pilots fail when there’s no clear ownership, no alignment on outcomes, and no governance to maintain integrity once systems go live.

The missing human layer

AI can automate processes, but it cannot replace judgment. Every pilot still needs someone who asks: Is this solving the right problem? Are we measuring the right things? Without that human filter, teams chase automation for its own sake, and systems drift from business reality.

Governance is what makes automation accountable

Governance brings clarity and rhythm to what AI builds. Leaders set success metrics, ensure compliance, and keep performance transparent. It’s less about managing code and more about ensuring the technology continues to serve strategic intent week after week, quarter after quarter.

Leadership turns experiments into systems

AI needs stewardship, not just setup. The right oversight connects data, people, and goals so pilots evolve into predictable marketing engines. This is where a fractional CMO model proves valuable: it embeds senior judgment and operational discipline without the overhead of a full-time executive hire, ensuring that marketing experiments scale responsibly.

Conclusion

AI will continue to reshape how marketing teams find and qualify leads. But the brands that see real value from it aren’t the ones who move fastest; they’re the ones who move deliberately.

Every successful AI pilot has the same foundation: clear ICPs, clean data, connected systems, and committed oversight. Technology amplifies what already exists. If the base is strong, AI multiplies impact. If it’s weak, AI simply scales the chaos.

The difference comes down to leadership. Human judgment, strategic clarity, and consistent governance turn AI from a shiny experiment into a sustainable growth engine.